MM1: Apple’s Multimodal LLM pretraining revealed

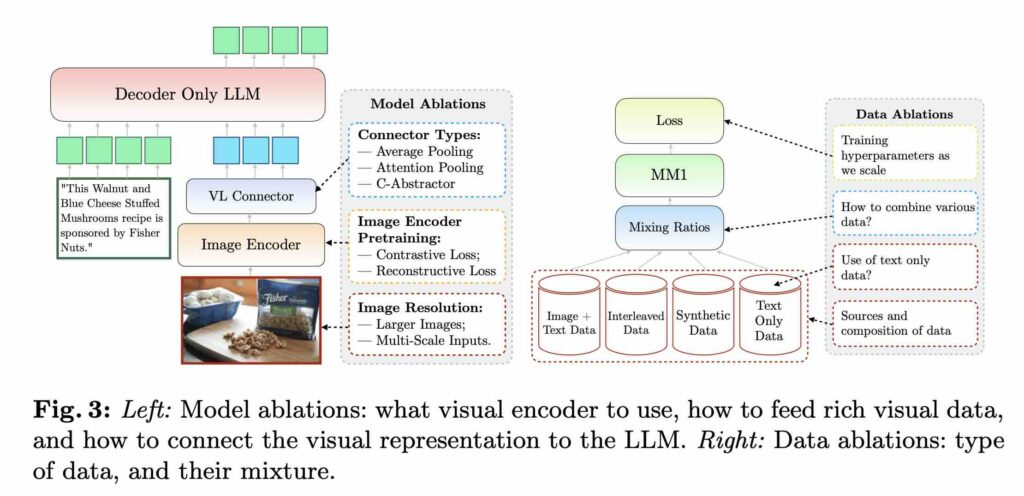

On 14 March 2024, a team of researchers at Apple released MM1, a multimodal LLM, along with their findings on multimodal LLM pre-training and fine-tuning. The work studies the importance of various architecture components and data choices in multimodal large language models. Through thorough analysis of the image encoder, vision language connector and different pre-training data options, several […]